Overview

With the rapid advancement of generative AI models such as GPT-4, industries are experiencing a revolution through AI-driven content generation and automation. However, this progress brings pressing ethical challenges, including data privacy issues, misinformation, bias, and accountability.

According to a 2023 report by the MIT Technology Review, a vast majority of AI-driven companies have expressed concerns about AI ethics and regulatory challenges. This highlights the growing need for ethical AI frameworks.

What Is AI Ethics and Why Does It Matter?

Ethical AI involves guidelines and best practices governing the responsible development and deployment of AI. Without ethical safeguards, AI models may lead to unfair outcomes, inaccurate information, and security breaches.

For example, research from Stanford University found that some AI models exhibit racial and gender biases, leading to unfair hiring decisions. Addressing these ethical risks is crucial for maintaining public trust in AI.

Bias in Generative AI Models

A significant challenge facing generative AI is inherent bias in training data. Since AI models learn from massive datasets, they often inherit and amplify biases.

The Alan Turing Institute’s latest findings revealed that AI-generated images often reinforce stereotypes, such as associating certain professions with specific genders.

To mitigate these biases, developers need to implement bias detection mechanisms, integrate ethical AI assessment tools, and establish AI accountability frameworks.
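As one illustration of what a bias detection mechanism might look like, the sketch below compares how often a model produces a favourable outcome for each group and reports the largest gap, a measure often called the demographic parity difference. The function names, the group labels "A" and "B", and the sample data are all hypothetical and chosen only for illustration; a real audit would use a dedicated fairness toolkit and more than one metric.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the fraction of favourable outcomes per group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the model produced the favourable outcome.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(records):
    """Return the difference between the highest and lowest group rates."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical model decisions, invented for this example.
sample = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = selection_rates(sample)
gap = parity_gap(sample)
print(rates, gap)
```

A gap near zero means the groups receive favourable outcomes at similar rates. A large gap is a signal to investigate further, not proof of unfair treatment on its own.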

Misinformation and Deepfakes

Generative AI has made it easier to create realistic yet false content, creating risks for political and social stability.

For example, during the 2024 U.S. elections, AI-generated deepfakes were used to manipulate public opinion. According to a report by the Pew Research Center, 65% of Americans worry about AI-generated misinformation.

To address this issue, businesses need to enforce content authentication measures, ensure AI-generated content is labeled, and collaborate with policymakers to curb misinformation.
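A very simple form of content labeling can be sketched as follows: attach a record stating that the text was AI-generated, together with a SHA-256 fingerprint, so that later edits to the text can be detected. This is only an illustration under stated assumptions. The field names and the generator name "example-model" are hypothetical, and a plain hash is not a signature, so it does not prove who created the label. Production systems would rely on signed provenance metadata instead.

```python
import hashlib


def label_content(text, generator):
    """Build a label record for a piece of AI-generated text."""
    digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
    return {"ai_generated": True, "generator": generator, "sha256": digest}


def matches_label(text, label):
    """Return True if the text is unchanged since the label was created."""
    digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
    return digest == label["sha256"]


# "example-model" is a placeholder name, not a real system.
label = label_content("Draft press release text.", "example-model")
print(matches_label("Draft press release text.", label))
print(matches_label("Draft press release text, edited.", label))
```

The first check succeeds because the text is identical to what was labeled. The second fails because the content was altered after labeling.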

Data Privacy and Consent

Protecting user data is a critical challenge in AI development. Many generative models use publicly available datasets, which can include copyrighted materials.

A 2023 European Commission report found that 42% of generative AI companies lacked sufficient data safeguards.

For ethical AI development, companies should implement explicit data consent policies, ensure ethical data sourcing, and maintain transparency in data handling.

Conclusion

Navigating AI ethics is crucial for responsible innovation. From bias mitigation to misinformation control, businesses and policymakers must take proactive steps.

As generative AI reshapes industries, companies must commit to responsible AI practices. With sound adoption strategies in place, AI innovation can align with human values.

Heath Ledger Then & Now!

Heath Ledger Then & Now! Marcus Jordan Then & Now!

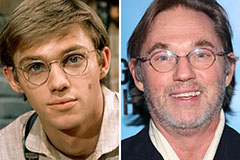

Marcus Jordan Then & Now! Richard Thomas Then & Now!

Richard Thomas Then & Now! Tina Louise Then & Now!

Tina Louise Then & Now! Ryan Phillippe Then & Now!

Ryan Phillippe Then & Now!